Most of the initial reviews of Apple's iPhone shared one complaint: AT&T's EDGE network is slow, and it's the fastest cellular network the iPhone supports. In an interview with The Wall Street Journal, Steve Jobs explained Apple's rationale for not including 3G support in the initial iPhone:

"When we looked at 3G, the chipsets are not quite mature, in the sense that they're not low-enough power for what we were looking for. They were not integrated enough, so they took up too much physical space. We cared a lot about battery life and we cared a lot about physical size. Down the road, I'm sure some of those tradeoffs will become more favorable towards 3G but as of now we think we made a pretty good doggone decision."

The primary benefit of 3G support is obvious: faster data rates. Using dslreports.com's mobile speed test, we were able to pull an average of 100kbps off of AT&T's EDGE network as compared to 1Mbps on its 3G UMTS/WCDMA network.
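To put those two figures in perspective, here is a rough back-of-the-envelope sketch of how long the same download would take on each network. The 500KB page size is purely a hypothetical value chosen for illustration, and the calculation assumes the measured average rates hold steady and ignores latency and protocol overhead, so real-world times would differ.

```python
# Average throughput we measured, in kilobits per second.
EDGE_KBPS = 100
UMTS_KBPS = 1000

def transfer_seconds(size_kilobytes: float, rate_kbps: float) -> float:
    """Ideal transfer time; ignores latency, overhead, and signal variation."""
    size_kilobits = size_kilobytes * 8  # 8 bits per byte
    return size_kilobits / rate_kbps

PAGE_KB = 500  # hypothetical page size, not a measured value

edge_time = transfer_seconds(PAGE_KB, EDGE_KBPS)
umts_time = transfer_seconds(PAGE_KB, UMTS_KBPS)
print(f"EDGE: {edge_time:.0f} s, UMTS: {umts_time:.0f} s, "
      f"ratio: {edge_time / umts_time:.0f}x")
```

Under these assumptions the same page takes roughly ten times as long over EDGE, which mirrors the 10:1 gap in the measured rates.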

Apple's stance is that the iPhone gives you a slower-than-3G solution with EDGE that doesn't consume a lot of power, and a faster-than-3G solution with Wi-Fi when you're in range of a network. Our tests showed that on Wi-Fi the iPhone was able to pull between 1 and 2Mbps, which is faster than what we got over UMTS, but not tremendously so. While we appreciate the iPhone's Wi-Fi support, the lightning-quick iPhone interface makes those times that you're on EDGE feel even slower than on other phones. Admittedly it doesn't take too long to get used to, but we wanted to dig a little deeper and see what really kept 3G out of the iPhone.

Pointing at size and power consumption, Steve gave us two targets to investigate. The space argument is an easy one to confirm, so we cracked open the Samsung Blackjack and looked at its 3G UMTS implementation, powered by Qualcomm:

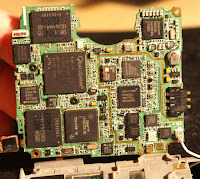

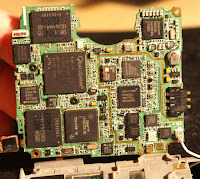

Motherboard Battle: iPhone (left) vs. Blackjack (right); only one layer of the iPhone's motherboard is present

Mr. Jobs indicated that integration was a limitation to bringing UMTS to the iPhone, so we attempted to identify all of the chips Apple used for its GSM/EDGE implementation (shown in purple) vs. what Samsung had to use for its Blackjack (shown in red):

The largest chip on both motherboards contains the multimedia engine, which houses the modem itself: GSM/EDGE in the case of the iPhone's motherboard (left) and GSM/EDGE/UMTS in the case of the Blackjack's motherboard (right). The two smaller chips on the iPhone appear to be the GSM transmitter/receiver and the GSM signal amplifier. On the Blackjack, the chip in the lower left is a Qualcomm power management chip that works in conjunction with the larger multimedia engine we mentioned above. The two medium-sized ICs in the middle appear to be the UMTS/EDGE transmitter/receivers, while the remaining chips are power amplifiers.

The iPhone would have to be a bit thicker, wider or longer to accommodate the same 3G UMTS interface that Samsung used in its Blackjack. Instead, Apple went with Wi-Fi alongside GSM; the square in green shows the Marvell 802.11b/g WLAN controller needed to enable Wi-Fi.

So the integration argument checks out, but what about the impact on battery life? In order to answer that question we looked at two smartphones - the Samsung Blackjack and Apple's iPhone. The Blackjack would be our 3G vs. EDGE testbed, while we'd look at the impact of Wi-Fi on power consumption using the iPhone.
