Thursday, September 27, 2007

RAID Primer: What's in a number?

With the increased use of computers in the daily lives of people worldwide, the dollar value of data stored on the average computer has steadily increased. Even as MTBF figures have moved from 8000 hours in the 1980s (example: MiniScribe M2006) to the current levels of over 750,000 hours (Seagate 7200.11 series drives), this increase in data value has offset the relative decrease of hard drive failures. The increase in the value of data, and the general unwillingness of most casual users to back up their hard drive contents on a regular basis, has put increasing focus on technologies which can help users to survive a hard drive failure. RAID (Redundant Array of Inexpensive Disks) is one of these technologies.
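
To put the two MTBF figures above in perspective, a rough back-of-the-envelope sketch can convert them into a yearly failure probability. This assumes a constant failure rate (an exponential model) and a drive that runs 24 hours a day. Both are simplifying assumptions rather than anything the drive vendors specify, and the function name is purely illustrative.

```python
import math

HOURS_PER_YEAR = 24 * 365  # drive assumed to be powered on around the clock


def annualized_failure_rate(mtbf_hours: float) -> float:
    """Chance of at least one failure within a year.

    Assumes a constant failure rate, i.e. exponentially distributed
    time to failure. This is a simplification of real drive behavior.
    """
    return 1.0 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


afr_1980s = annualized_failure_rate(8_000)     # MTBF quoted for 1980s drives
afr_2007 = annualized_failure_rate(750_000)    # MTBF quoted for current drives

print(f"8,000 hour MTBF:   {afr_1980s:.1%} chance of failure per year")
print(f"750,000 hour MTBF: {afr_2007:.1%} chance of failure per year")
```

Under this model the older figure works out to roughly a two-in-three chance of failure per year, while the newer one is a little over one percent. That is small, but not negligible once the data on the drive is valuable.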

Drawing on whitepapers produced in the late 1970s, the term RAID was coined in 1987 by researchers at the University of California, Berkeley in an effort to put into practice the theoretical gains in performance and redundancy which could be made by teaming multiple hard drives in a single configuration. While their paper proposed certain levels of RAID, the practical needs of the IT industry have brought several slightly differing approaches. Most common now are:

RAID 0 - Data Striping

RAID 1 - Data Mirroring

RAID 5 - Data Striping with Parity

RAID 6 - Data Striping with Redundant Parity

RAID 0+1 - Data Striping with a Mirrored Copy

Each of these RAID configurations has its own benefits and drawbacks, and is targeted for specific applications. In this article we'll go over each and discuss in which situations RAID can potentially help - or harm - you as a user.
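
As a minimal illustration of what "striping with parity" in the list above means, the sketch below splits data across three hypothetical drives, stores an XOR parity block, and rebuilds one lost block from the survivors. It is a toy model only: the helper names are invented for this example, and a real RAID 5 array rotates the parity block across drives and works on fixed-size stripes at the controller or driver level.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_stripe(data_blocks):
    """Return the data blocks followed by one parity block.

    Toy layout: parity always sits in the last position.
    """
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, lost_index):
    """Recompute the block at lost_index from all surviving blocks."""
    survivors = [block for i, block in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)


data = [b"AAAA", b"BBBB", b"CCCC"]   # one block per data drive
stripe = make_stripe(data)           # three data blocks plus parity
recovered = rebuild(stripe, 1)       # pretend the second drive died
print(recovered == data[1])
```

The same XOR trick recovers any single missing block, including the parity block itself, which is why a single-parity array survives one drive failure but not two.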

HP Blackbird 002: Back in Black

Whether it's cars, aircraft, houses, motorcycles, or computers, people always seem to like hearing about the most exotic products on the planet. HP's latest and greatest desktop computer offering bears the name of one of the most mystical aircraft of all time, the SR-71 Blackbird. We can't say for sure whether the choice of name actually comes from the famous surveillance aircraft or not, but we would venture to say this is the case. See, besides the name, the two have quite a few other common attributes.

The SR-71 Blackbird was on the cutting edge of technology, pushing the boundaries of what was deemed achievable. It was the first aircraft that was designed to reduce its radar signature, and while it would fail in this respect it helped pave the way for future stealth aircraft. Perhaps more notably, the Blackbird was the fastest aircraft ever produced, officially reaching speeds of Mach 3.2 and unofficially reaching even higher. The actual top speed remains classified to this day. The extremely high speeds required some serious out-of-the-box thinking to achieve, so the Blackbird was built from flexible panels that actually fit loosely together at normal temperatures; only after the aircraft heated up from air friction would the panels fit snugly, and in fact the SR-71 would leak fuel while sitting on the runway before takeoff. After landing, the surface of the jet was so hot (above 300°C) that maintenance crews had to leave it alone for several hours to allow it to cool down.

So how does all of that relate to the HP Blackbird 002? In terms of components and design, the Blackbird is definitely on the cutting edge of design and technology, and it features several new "firsts" in computers. When we consider that the Blackbird comes from a large OEM that doesn't have a reputation for producing such designs, it makes some of these firsts even more remarkable. Talking about the temperatures that the SR-71 reached during flight was intentional, because the Blackbird 002 can put out a lot of heat. No, you won't need to let it cool down for several hours after running it, but the 1100W power supply is definitely put to good use. If electricity is the fuel of the 002, saying that it leaks fuel while sitting idle definitely wouldn't be an overstatement. And last but not least, the Blackbird 002 is fast - extremely fast - easily ranking among the best when it comes to prebuilt desktop computers.

Where did all of this come from? We are after all talking about HP, a company that has been in the computer business for decades, and during all that time they have never released anything quite like this. Flash back to about a year ago, when HP acquired VoodooPC, a boutique computer vendor known for producing extremely high-performance computers with exotic paint jobs and case designs - with an equally exotic price. The HP Blackbird 002 represents the first fruits of this merger, and while it may not be quite as exotic as the old VoodooPC offerings in all respects, it certainly blazes new trails in the world of OEM computers. There's clearly a passion for computer technology behind the design, and even if we might not personally be interested in purchasing such a computer, we can certainly appreciate all the effort that has gone into creating this latest "muscle car" - or pseudo-stealth aircraft, if you prefer.

Dell 2407WFP and 3007WFP LCD Comparison

Apple was one of the first companies to come out with very large LCDs with their Cinema Display line, catering to the multimedia enthusiasts that have often appreciated Apple's systems. Dell followed their lead when they launched the 24" 2405FPW several years ago, except that with their larger volumes they were able to offer competing displays at much more attractive prices. In short order, the 800 pound gorilla of business desktops and servers was able to occupy the same role in the LCD market. Of course, while many enthusiasts wouldn't be caught running a Dell system, the most recent Dell LCDs have been received very favorably by all types of users -- business, multimedia, and even gaming demands feel right at home on a Dell LCD. Does that mean that Dell LCDs are the best in the world? Certainly not, but given their price and ready worldwide availability, they have set the standard by which most other LCDs are judged.

In 2006, Dell launched their new 30" LCD, matching Apple's 30" Cinema Display for the largest commonly available computer LCD on the market. Dell also updated most of their other LCD sizes with the xx07 models, which brought improved specifications and features. These displays have all been available for a while now, but we haven't had a chance to provide reviews of them until now. As we renew our LCD and display coverage on AnandTech, and given the number of users that are already familiar with the Dell LCDs, we felt it was important to take a closer look at some of these Dell LCDs in order to help establish our baseline for future display reviews.

We recently looked at the Gateway FPD, our first LCD review in some time, and we compared it with the original Dell 24" LCD, the 2405FPW. In response to some comments and suggestions, we have further refined our LCD reviewing process and will be revisiting aspects of both of the previously tested displays. However, our primary focus is going to be on Dell's current 24" and 30" models, the 2407WFP and 3007WFP. How well do these LCDs perform, where do they excel, and where is there room for improvement? We aim to provide answers to those questions.

AMD's New Gambit: Open Source Video Drivers

As the computer hardware industry has matured, it has settled into a very regular and predictable pattern. Newer, faster hardware will come out, rivals will fire press releases back and forth showcasing that their product is the better one, price wars will break out, someone cheats now and then, someone comes up with an even more confusing naming scheme, etc. The fact of the matter is that in the computer hardware industry, there's very little that actually surprises us. We aren't psychic and can't predict when and to whom the above will happen, but we can promise you that it will happen to someone and that it will happen again a couple of years after that. The computer hardware playbook is well established and there's not much that goes on that deviates from it.

So we have to admit that we were more than a little surprised when AMD told us earlier this month that they intended to do something well outside of the playbook and something that we thought was practically impossible: they were going to officially back and provide support for open source drivers for their video cards, in order to establish a solid, full-featured open source Linux video driver. The noteworthiness of this stems from the fact that the GPU industry is incredibly competitive and consequently incredibly secretive about plans and hardware. To allow for modern, functional open source video drivers to be made, a great deal of specifications must be released so that programmers may learn how to properly manipulate the hardware, and this flies in the face of the secretive nature of how NVIDIA and ATI go about their hardware and software development. Yet AMD has begun to take the steps required to pull this off, and we can't help but be immediately befuddled by what's going on, nor can we ignore the implications of this.

Before we go any further, however, we should first talk quickly about what has led up to this, as there are a couple of issues that have directly led to what AMD is attempting to do. We'll start with the Linux kernel and the numerous operating system distributions based upon it.

Unlike Windows and Mac OS X, the Linux kernel is not designed for use with binary drivers, that is, drivers supplied pre-compiled by a vendor and plugged into the operating system as a type of black box. While it's possible to make Linux work with such drivers, there are several roadblocks in doing so, among these being the lack of a stable application programming interface (API) for writing such drivers. The main Linux developers do not want to hinder the development of the kernel, but having a stable driver API would do just that by forcing them to avoid making any changes or improvements in that section of the code that would break the API. Furthermore, the lack of a stable driver API encourages device makers to release only open source drivers, in line with the open source philosophy of the Linux kernel itself.

This is in direct opposition to how AMD and NVIDIA prefer to operate, as releasing open source drivers would present a number of problems for them, chief among them exposing how parts of their hardware work when they want to keep that information secret. As a result both have released only binary drivers for their products, including their Linux drivers, and have done the best they can to work around any problems that the lack of a stable API may cause.

For a number of reasons, AMD's video drivers for Linux have been lackluster. NVIDIA has set the gold standard for the two, as their Linux drivers perform very close to their Windows drivers and are generally stable. Meanwhile AMD's drivers have performed half as well at times, and there have been several notable stability issues with their drivers. AMD's Linux drivers aren't by any means terrible (nor are NVIDIA's drivers perfect) but they're not nearly as good as they should be.

Meanwhile, the poor quality of the binary drivers has in turn given AMD's graphics division a poor name in the open source community. While we have no reason to believe that this has significantly impacted AMD's sales, since desktop usage of Linux is still low (and gaming even lower), it's still not a reputation AMD wants to have, as it can eventually bleed over into the general hardware and gaming communities.

This brings us back to the present, and what AMD has announced. AMD will be establishing a viable open source Linux driver for their X1K and HD2K series video cards, and will be continuing to provide their binary drivers simultaneously. AMD will not be providing any of their current driver code for use in the open source driver - this would break licensing agreements and reveal trade secrets - rather, they want their open source driver built from the ground up. Furthermore, they will not be directly working on the driver themselves (we assume all of their on-staff programmers are "contaminated" from a legal point of view) and instead will be having the open source community build the drivers, with Novell's SuSE Linux division leading the effort.

With that said, their effort is just starting and there are a lot of things that must occur to make everything come together. AMD has done some of those things already, and many more will need to follow. Let's take a look at what those things are.

Saturday, September 15, 2007

Building a Better (Linux) GPU Benchmark

"The program computes frames per second for an application that uses OpenGL or SDL. It also takes screenshots periodically, and creates an overlay to display the current FPS/time."This is accomplished by defining a custom SwapBuffers function. For executables that are linked to GL at compile time, the LD_PRELOAD environment variable is used to invoke the custom SwapBuffers function. For executables that use run-time linking - which seems to be the case for most games - a copy of the binary is made, and all references to libGL and the original glXSwapBuffers function are replaced by references to our library and the custom SwapBuffers function. A similar procedure is done for SDL. We can then do all calculations on the frame buffer or simply dump the frame at will."You can read more about SDL and OpenGL. SDL is a "newer" library bundled with most recent Linux games (Medal of Honor: AA, Unreal Tournament 2004). In many ways, SDL behaves very similarly to DirectX for Linux, but utilizes OpenGL for 3D acceleration.

Dell 2707WFP: Looking for the Middle Ground of Large LCDs

As you can see from the above table, the 27" LCDs currently boast the largest pixel pitch outside of HDTV offerings. However, the difference between a 15" or 19" pixel pitch and that of the 2707WFP is really quite small. If you're one of those that feel a slightly larger pixel pitch is preferable - for whatever reason - the 2707WFP doesn't disappoint. Dell has made some other changes relative to their other current LCD offerings, however, so let's take a closer look at this latest entrant into the crowded LCD market.
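
For readers who want to check the pixel pitch comparison themselves, pitch can be estimated from a panel's diagonal and its resolution. The sketch below is a rough calculation under stated assumptions: the resolutions (1920x1200 for the 27" panel, 1280x1024 for a 19" panel, 1024x768 for a 15" panel) are not given in this excerpt, and the nominal diagonal is treated as the exact viewable size.

```python
import math

MM_PER_INCH = 25.4


def pixel_pitch_mm(diagonal_inches, width_px, height_px):
    """Approximate pixel pitch: physical diagonal divided by diagonal in pixels."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_inches * MM_PER_INCH / diagonal_px


# Assumed native resolutions; not taken from the article's table.
pitch_27 = pixel_pitch_mm(27, 1920, 1200)
pitch_19 = pixel_pitch_mm(19, 1280, 1024)
pitch_15 = pixel_pitch_mm(15, 1024, 768)

for label, pitch in (('27"', pitch_27), ('19"', pitch_19), ('15"', pitch_15)):
    print(f"{label}: {pitch:.3f} mm")
```

With these assumed resolutions all three results land within about a hundredth of a millimeter of each other, which is consistent with the point that the difference is really quite small.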

Low Power Server CPU Redux: Quad-Core Comes to Play

Wednesday, September 12, 2007

Optio X: A Look at Pentax's Ultra Thin 5MP Digicam

The Optio X certainly has a unique appearance, with a twistable body that is the slimmest in the Optio series. With a stylish black and silver body, the Optio X is one of Pentax's latest feature-packed 5 megapixel digicams. The Optio X features a 3x optical zoom lens and 15 different recording modes as well as movie and voice memo functionality. In addition, the camera offers advanced functions such as manual white balance and 5 different bracketing options. In our review of this camera, we discovered several strengths and weaknesses. For starters, it isn't the fastest camera available, but in most cases it puts in a decent performance. For example, it has respectable startup and write speeds. Unfortunately, the auto focus can be somewhat slow. In our test, the camera took nearly a full second to focus and take a picture. With respect to image quality, the Optio X generally does a good job taking even exposures with accurate color. However, we found clipped shadows and highlights in some of our samples in addition to jaggies along diagonal lines. Read on for more details of the ultra-thin Optio X.

ASUS P5N32-E SLI Plus: NVIDIA's 650i goes Dual x16

When the first 680i SLI motherboards were launched back in November they offered an incredible array of features and impressive performance to boot. However, all of this came at a significant price of $250 or more during the first month of availability. We thought additional competition from the non-reference board suppliers such as ASUS, abit, and Gigabyte would drive prices down over time. The opposite happened to a certain extent with the non-reference board suppliers, as prices zoomed above the $400 mark for boards like the ASUS Striker Extreme. The reference board design from suppliers such as EVGA and BFG has dropped to near $200 recently but we are still seeing $300 plus prices for the upper end ASUS and Gigabyte 680i boards.

ASUS introduced the P5N32-E SLI board shortly after the Striker Extreme as a cost reduced version of that board in hopes of attracting additional customers. While this was a good decision, that board did not compete too well against the reference 680i boards in the areas of performance, features and cost. With necessity being the mother of all inventions, ASUS quickly went to work on a board design that would offer excellent quality and performance at a price point that was at least half that of the Striker Extreme.

They could not get there at the time with the 680i SLI chipset and the recently released 680i LT SLI cost reduced chipset was not available so ASUS engineered their own version that would meet a market demand for a sub-$200 motherboard that offered the features and performance of the 680i chipset. They took the recently introduced 650i SLI SPP (C55) and paired it with the 570 SLI MCP (MCP55) utilized in the AMD 570/590 SLI product lines. ASUS called this innovative melding of an Intel SPP and AMD MCP based chipsets their Dual x16 Chipset with HybridUp Technology. Whatever you want to call it, we know it just flat out works and does so for around $185 as you will see in our test results shortly.

Before we get to our initial performance results and discussion of the ASUS P5N32-E SLI Plus hybrid board design, we need to first explain the differences between it and the 680i/680i LT motherboards. In an interesting turn of events, we find the recently introduced 680i LT SLI chipset also utilizing the nForce 570 SLI MCP with the new/revised 680i LT SPP, while the 680i SLI chipset utilizes the nForce 590 SLI MCP and 680i SLI SPP.

With the chipset designations out of the way, let's get to the real differences. All three designs officially support front-side bus speeds up to 1333MHz, so the upcoming Intel processors are guaranteed to work, and each design offers very good to excellent overclocking capabilities, with the 680i SLI offering the best overclocking rates to date in testing. Each board design also offers true dual x16 PCI Express slots for multi-GPU setups, with ASUS designing the dual x8 capability on the 650i SPP as a single x16 setup with the second x16 slot capability being provided off the MCP as in the other solutions. We are still not convinced of the performance advantages of the dual x16 designs over the dual x8 offerings in typical gaming or application programs. We only see measurable differences between the two solutions once you saturate the bus bandwidth at 2560x1600 resolutions with ultra-high quality settings, and even then the performance differences are usually less than 5%.

The 680i SLI and the ASUS hybrid 650i boards offer full support for Enhanced Performance Profile (SLI-Ready) memory at speeds up to 1200MHz, with the 680i LT only offering official 800MHz support. However, this only means you will have to tweak the memory speed and timings yourself in the BIOS, something most enthusiasts do anyway. We had no issue running all three chipset designs at memory speeds up to 1275MHz when manually adjusting the timings.

Other minor differences have the 680i SLI and ASUS Plus boards offering LinkBoost technology that has shown zero to very minimal performance gains in testing. Both boards also offer a third x16 physical slot that operates at x8 electrical to provide "physics capability" - something else that has not been introduced yet. Fortunately this slot can be used for PCI Express devices up to x8 speeds so it is not wasted. Each design also offers dual Gigabit Ethernet connections with DualNet technology and support for 10 USB devices. The 680i LT SLI design offers a single Gigabit Ethernet, support for 8 USB devices, and does not support LinkBoost or a third x16 physical slot.

What we basically have is the ASUS hybrid design offering the same features as the 680i SLI chipset at a price point near that of the feature reduced 680i LT SLI setup. This leads us into today's performance review of the ASUS P5N32-E SLI Plus. In our article today we will go over the board layout and features, provide a few important performance results, and discuss our findings with the board. With that said, let's take a quick look at this hybrid solution and see how well it performs against the purebreds.

Gigabyte GA-P35T-DQ6: DDR3 comes a knocking, again

The recent introduction of Intel's new P35 chipset brought with it the official introduction of the 1333FSB and DDR3 support for Intel processors. The P35 chipset is also the first chipset to officially support the upcoming 45nm CPU architecture. We reviewed the P35 chipset and the new Intel ICH9 Southbridge in detail and found the combination to offer one of the best, if not the best, performance platforms for Intel's Core 2 Duo family of processors.

This does not necessarily mean the P35 is the fastest chipset on paper or by pure design; it's just that the current implementation of this "wünder" chip by the motherboard manufacturers has provided us with the overall top performing chipset in the Intel universe at this time. Of course this could change at a moment's notice based upon new BIOS or chipset releases, but the early maturity and performance levels of the P35 have surprised us.

Our first look at DDR3 technology provides a glimpse of where memory technology is headed for the next couple of years. We do not expect widespread support for DDR3 until sometime in 2008 but with the right DDR3 modules we have seen performance equaling or bettering that of current DDR2 platforms. However, this does not mean DDR2 memory technology is stagnant now; far from it, as we will soon see standard DDR2-1066 modules with fairly low latencies running at 1.8V with overclocking capabilities up to or exceeding DDR2-1500 in some cases.

What immediate impact this will have on the DDR3 memory market is unclear right now. Based on our early information we should see the more performance oriented DDR2 motherboards outperforming their DDR3 counterparts until DDR3 latencies and speeds are greatly improved. We do expect these improvements to come, just not quickly enough to hold off the initial onslaught of DDR2-1066 and what is shaping up to be some impressive overclocking capabilities.

That brings us to today's discussion of the Gigabyte GA-P35T-DQ6 motherboard based upon the Intel P35 chipset with full DDR3 compatibility. This motherboard is Gigabyte's current flagship product and we expect the product to launch on or right after June 4th. Gigabyte was kind enough to provide us with a full retail kit for our preview article today.

The P35T-DQ6 motherboard is based on the same platform utilized by its DDR2 counterpart, the P35-DQ6, which has already provided an excellent performance alternative to the ASUS P5K series of motherboards. As we stated in our preview article, making a choice between the current P35 motherboards is difficult and is largely dependent upon the user's requirements.

We initially found the ASUS boards to be slightly more mature, as they offer a performance oriented BIOS with some additional fine tuning options not available in the other boards. However, that is quickly changing as we receive BIOS updates and new board designs from other manufacturers. We will provide an answer for what board we think best exemplifies the performance and capability of the P35 chipset in our roundup coming in the latter part of June.

In the meantime, we have our second DDR3 board in-house for testing and will provide some early results with this somewhat unique motherboard that brings an excellent level of performance to the table. The question remains if this board can outperform the ASUS P5K3 Deluxe, and we hope to provide some early answers to that question today. Let's take a quick glimpse at the Gigabyte GA-P35T-DQ6 now and see how it performs.

Sunday, September 9, 2007

Canon Digital Rebel XT: Hardly an Entry-Level DSLR

In our review, we found that the 350D acts nothing like an entry-level camera. It has an instant startup time just like the 20D and its cycle/write times are nearly identical. The 350D is capable of capturing extraordinary detail and offers several parameters to adjust the in-camera processing levels. In our noise test, the 350D shows impressive noise control and produces surprisingly clean images throughout the ISO range. Read on for a full review of this remarkable camera to see why it might be your first digital SLR.

Saturday, September 8, 2007

Toshiba Satellite X205-S9359 Take Two: Displays and Drivers

Jumping right into the thick of things, let's take a moment to discuss preinstalled software. Most OEMs do it, and perhaps there are some users out there that actually appreciate all of the installed software. Some of it can be truly useful, for instance applications that let you watch high-definition movies on the included HD DVD drive. If you don't do a lot of office work, Microsoft Works is also sufficient for the basics, although most people should probably just give in and purchase a copy of Microsoft Office. We're still a bit torn about some of the UI changes in Office 2007, but whether you choose the new version or stick with the older Office 2003 the simple fact of the matter is that most PCs need a decent office suite. The standard X205 comes with a 60 day trial version of Office 2007 installed, however, which is unlikely to satisfy the needs of most users. If you don't need Office and will be content with Microsoft Works, there's no need to have a 60 day trial. Conversely, if you know you need Microsoft Office there's no need to have Works installed and you would probably like the option to go straight to the full version of Office 2007.

There is an option to purchase Microsoft Office Home and Student 2007 for $136, but it's not entirely clear if that comes in a separate box or if the software gets preinstalled - and if it's the latter, hopefully Microsoft Works gets removed as well. As far as we can tell, Toshiba takes a "one-size-fits-all" approach to software, and we would really appreciate a few more options. In fact, what we would really appreciate is the ability to not have a bunch of extra software installed.

Above is a snapshot of Windows Vista's Add/Remove Programs tool for the X205 we received, prior to installing or uninstalling anything. The vast majority of the extra software is what we would kindly classify as "junk". All of it is free and easily downloadable for those who are actually interested in having things like Wild Tangent games on their computer. We prefer to run a bit leaner in terms of software, so prior to conducting most of our benchmarks we of course had to uninstall a lot of software, which took about an hour and several reboots. If this laptop is in fact intended for the "gamer on the go", which seems reasonable, we'd imagine that most gamers would also prefer to get a cleaner default system configuration. Alienware did an excellent job in this area, and even Dell does very well on their XPS line. For now, Toshiba holds the record for having the most unnecessary software preinstalled.

Gateway FX530: Mad Cows and Quad Core Overclocking

A Messy Transition (Part 3): Vista Buys Some Time

As we saw in part 1 of this series, large applications and games under Windows are getting incredibly close to hitting the 2GB barrier, the amount of virtual address space a traditional Win32 (32-bit) application can access. Once applications begin to hit these barriers, many of them will start acting up and/or crashing in unpredictable ways which makes resolving the problem even harder. Developers can work around these issues, but none of these except for building less resource intensive games or switching to 64-bit will properly solve the problem without creating other serious issues.

Furthermore, as we saw in part 2, games are consuming greater amounts of address space under Windows Vista than Windows XP. This makes Vista less suitable for use with games when at the same time it will be the version of Windows that will see the computing industry through the transition to 64-bit operating systems becoming the new standard. Microsoft knew about the problem, but up until now we were unable to get further details on what was going on and why. As of today that has changed.

Microsoft has published knowledge base article 940105 on the matter, and with it has finalized a patch to reduce the high virtual address space usage of games under Vista. From this and our own developer sources, we can piece together the problem that was causing the high virtual address space issues under Vista.

As it turns out, our initial guess about the issue being related to memory allocations being limited to the 2GB of user space for security reasons was wrong; the issue is simpler than that. One of the features of the Windows Vista Display Driver Model (WDDM) is that video memory is no longer a limited-sharing resource that applications will often take complete sovereign control of; instead the WDDM offers virtualization of video memory so that all applications can use what they think is video memory without needing to actually care about what else is using it - in effect removing much of the work of video memory management from the application. From both a developer's and user's perspective this is great as it makes game/application development easier and multiple 3D accelerated applications get along better, but it came with a cost.

All of that virtualization requires address space to work with; Vista uses an application's 2GB user allocation of virtual address space for this purpose, scaling the amount of address space consumed by the WDDM with the amount of video memory actually used. This feature is ahead of its time, however, as games and applications written to the DirectX 9 and earlier standards didn't have the WDDM to take care of their memory management, so applications did it themselves. This required the application to also allocate some virtual address space to its management tasks, which is fine under XP.

However, under Vista this results in the application and the WDDM effectively playing a game of chicken: both are consuming virtual address space out of the same 2GB pool and neither is aware of the other doing the exact same thing. Amusingly, given a big enough card (such as a 1GB Radeon HD 2900 XT), it's theoretically possible to consume all 2GB of virtual address space under Vista with just the WDDM and the application each trying to manage the video memory, which would leave no further virtual address space for anything else the application needs to do. In practice, both the virtual address space allocations for the WDDM and the application video memory manager attempt to grow as needed, and ultimately crash the application as each starts passing 500MB+ of allocated virtual address space.
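The double-counting described above can be put into rough numbers. The following is only a minimal sketch: it assumes, purely for illustration, that the WDDM and the application's own memory manager each reserve address space one-to-one with the video memory in use, and the function name and the 900MB figure are hypothetical.

```python
# Illustrative model only: assumes the WDDM and the game's own video memory
# manager each reserve address space equal to the video memory in use.
USER_SPACE_MB = 2048  # 2GB of user-mode virtual address space per 32-bit process


def remaining_address_space_mb(video_mb_in_use, other_allocations_mb=0):
    """Return the user address space left after both managers take their share."""
    wddm_mb = video_mb_in_use         # reserved by the WDDM (assumed 1:1)
    app_manager_mb = video_mb_in_use  # reserved by the application (assumed 1:1)
    return USER_SPACE_MB - wddm_mb - app_manager_mb - other_allocations_mb


# A 1GB card with all of its memory in use leaves nothing for the game itself.
print(remaining_address_space_mb(1024))
# 512MB of video memory in use plus a hypothetical 900MB of other game data.
print(remaining_address_space_mb(512, 900))
```

Under these assumptions the first call leaves 0MB and the second leaves 124MB, which shows how quickly the shared 2GB pool runs out once both sides track the same video memory.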

This obviously needed to be fixed, and for a multitude of reasons (such as Vista & XP application compatibility) such a fix needed to be handled by the operating system. That fix is KB940105, which is a change to how the WDDM handles its video memory management. Now the WDDM will not default to using its full memory management capabilities, and more importantly it will not be consuming virtual address space unless specifically told to by the application. This will significantly reduce the virtual address space usage of an application when video memory is the culprit, but at best it will only bring Vista down to the kind of virtual address space usage of XP.

Laptop LCD Roundup: Road Warriors Deserve Better

Killing the Business Desktop PC Softly

Silver Power Blue Lightning 600W

The Tagan brand was established to focus more on the high-end gamers and enthusiasts, where quality is the primary concern and price isn't necessarily a limiting factor. Silver Power takes a slightly different route, expanding the product portfolio into the more cost-conscious markets. Having diverse product lines that target different market segments is often beneficial for a company, though of course the real question is whether or not Silver Power can deliver good quality for a reduced price.

We were sent their latest model, the SP-600 A2C "Blue Lightning" 600W power supply, for testing. This PSU delivers 24A on the 3.3V rail and 30A on the 5V rail, which is pretty average for a 600W power supply. In keeping with the latest power supply guidelines, the 12V power is delivered on two rails, each capable of providing up to 22A. However, that's the maximum power each 12V rail can deliver; the total combined power capable of being delivered on the 3.3V, 5V, and 12V rails is 585W, and it's not clear exactly how much of that can come from the 12V rails, which are each theoretically capable of delivering up to 264W.
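The rail arithmetic above is easy to check. The short sketch below multiplies the quoted voltage and current for each rail and compares the sum of the individual maxima with the 585W combined limit; the rail labels and variable names are our own, and the script says nothing about how the PSU actually shares power between rails.

```python
# Rail ratings quoted in the text: (volts, amps). Labels are illustrative.
RAILS = {
    "3.3V": (3.3, 24.0),
    "5V": (5.0, 30.0),
    "12V1": (12.0, 22.0),
    "12V2": (12.0, 22.0),
}
COMBINED_LIMIT_W = 585.0  # combined limit quoted in the text

# Power per rail is simply volts times amps.
per_rail_w = {name: volts * amps for name, (volts, amps) in RAILS.items()}
sum_of_maxima_w = sum(per_rail_w.values())

for name, watts in per_rail_w.items():
    print(f"{name}: {watts:.1f} W")
print(f"Sum of individual maxima: {sum_of_maxima_w:.1f} W")
print(f"Excess over combined limit: {sum_of_maxima_w - COMBINED_LIMIT_W:.1f} W")
```

The individual maxima add up to roughly 757W, well above the 585W combined rating, so the rails cannot all be loaded to their limits at the same time.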

RAID Primer: What's in a number?

OCZ Introduces DDR3-1800

µATX Overview: Prelude to a Roundup

Thursday, September 6, 2007

Zippy Serene (GP2-5600V)

They are known for having extremely reliable server power supplies, but recently Zippy has made the step into the retail desktop PSU market with several high class offerings. The Gaming G1 power supply in our last review exhibited very high quality, but it could still use quite a bit of improvement in order to better target the retail desktop PC market. Today we will be looking at the Serene 600W (GP2-5600V), a power supply that was built with the goal of having the best efficiency possible. The package claims 86%, which is quite a lofty goal for a retail product.

As we have seen many times with other power supplies, the Serene comes with a single 12V rail. We have written previously that this does not conform to the actual Intel Power Supply Design Guidelines, but as we have seen, readers and manufacturers have differing opinions about this issue. Some say it is no problem at all, since enough safety features will kick in before something bad happens (i.e. overloading the power supply), while others prefer to stick to the rules and have released power supplies with up to six 12V rails. While the lower voltage rails each have 25A at their disposal, the single 12V rail has 40A and should have no difficulty powering everything a decent system needs.

Given the name, one area that will be of particular interest to us is how quiet this power supply manages to run. Granted, delivering a relatively silent power supply that provides 600W is going to be a bit easier than making a "silent" 1000W power supply, but we still need to determine whether or not the Zippy Serene can live up to its name.

ASRock 4CoreDual-SATA2: Sneak Peek

We provided a series of reviews centered on the 775Dual-VSTA last year, which was the first board in the VIA PT880 based family of products from ASRock. That board was replaced by the 4CoreDual-VSTA last winter that brought with it a move from the PT880 Pro to the Ultra version of the chipset along with quad core compatibility. ASRock is now introducing the 4CoreDual-SATA2 board with the primary difference being a move from the VT8237A Southbridge to the VT8237S Southbridge that offers native SATA II compatibility.

Our article today is a first look at this new board to determine if there are any performance differences between it and the 4CoreDual-VSTA in a few benchmarks that are CPU and storage system sensitive. We are not providing a full review of the board and its various capabilities at this time; instead this is a sneak peek to answer numerous reader questions surrounding any differences between the two boards.

We will test DDR, AGP, and even quad core capabilities in our next article that will delve into the performance attributes of this board and several other new offerings from ASRock and others in the sub-$70 Intel market. While most people would not run a quad core processor in this board, it does have the capability and our Q6600 has been running stable now for a couple of weeks, though we have run across a couple of quirks that ASRock is working on. The reason we even mention this is that with Intel reducing the pricing on the Q6600 to the $260 range shortly, it might just mean the current users of the 4CoreDual series will want to upgrade CPUs without changing other components (yet). In the meantime, let's see if there are any initial differences between the two boards besides a new Southbridge.

Apple TV - Part 2: Apple Enters the Digital Home

Microsoft would be quite happy with that assessment but there’s one key distinction: PC does not have to mean Windows PC, it could very well mean a Mac. Both Microsoft and Apple have made significant headway into fleshing out the digital home. Microsoft’s attempts have been more pronounced; the initial release of Windows XP Media Center Edition was an obvious attempt at jump starting the era of the digital home. Microsoft’s Xbox 360 and even Windows Vista are both clear attempts to give Microsoft a significant role in the digital home. Microsoft wants you to keep your content on a Vista PC, whether it be music or movies or more, and then stream it to an Xbox 360 or copy it to a Zune to take it with you.

Apple’s approach, to date, has been far more subtle. While the iPod paved a crystal clear way for you to take your content with you, Apple had not done much to let you move your content around your home. If you have multiple computers running iTunes you can easily share libraries, but Apple didn’t apply its usual elegant simplicity to bridging the gap between your computer and your TV; Apple TV is the product that aims to change that.

Apple TV is nothing more than Apple’s attempt at a digital media extender, a box designed to take content from your computer and make it accessible on a TV. As Microsoft discovered with Media Center, you need a drastically different user interface if you're going to be connected to a TV. Thus the (expensive) idea of simply hooking your computer up to your TV died and was replaced with a much better alternative: keep your computer in place and just stream content from it to dumb terminals that will display it on a TV, hence the birth of the media extender. Whole-house networking became more popular, and barriers were broken with the widespread use of wireless technologies, paving the way for networked media extenders to enter the home.

The problem is that most of these media extenders were simply useless devices. They were either too expensive or too restrictive with what content you could play back on them. Then there were the usual concerns about performance and UI, not to mention compatibility with various platforms.

Microsoft has tried its hand at the media extender market, the latest attempt being the Xbox 360. If you've got Vista or XP Media Center Edition, the Xbox 360 can act like a media extender for content stored on your PC. With an installed user base of over 10 million, it's arguably the most pervasive PC media extender currently available. But now it's Apple's turn.

Skeptics are welcome, as conquering the media extender market is not as easy as delivering a simple UI. If that's all it took we'd have a lot of confidence in Apple, but the requirements for success are much higher here. Believe it or not, but the iPod's success was largely due to the fact that you could play both legal and pirated content on it; the success of the iTunes Store came after the fact.

The iPod didn't discriminate: if you had an MP3, it would play it. Media extenders aren't as forgiving, mostly because hardware makers are afraid of the ramifications of building a device that is used predominantly for pirated content. Apple, obviously with close ties to content providers, isn't going to release something that is exceptionally flexible (although there is hope for the unit from within the mod community). Apple TV will only play H.264 or MPEG-4 encoded video, with bit rate, resolution and frame rate restrictions (we'll get into the specifics later); there's no native support for DivX, XviD, MPEG-2 or WMV.

Already lacking the ability to play all of your content, is there any hope for Apple TV or will it go down in history as another Apple product that just never caught on?

Dual Core Linux Performance: Two Penguins are Better than One

But does any of this translate to great desktop performance for dual core processors? We are going to look at that question today while also determining whether Intel or AMD is the better suited contender for the Linux desktop. We have some slightly non-traditional (but very replicable) tests we plan on running today that should demonstrate the strengths of each processor family as well as the difference between some similar Windows tests that we have performed in the past on similar configurations. Ultimately, we would love to see a Linux configuration perform the same task as a Windows machine but faster.

Just to recap, the scope of today's exploration will be to determine which configuration offers the best performance per buck on Linux, and whether or not any of these configurations outperform similar Windows machines running similar benchmarks. It becomes really easy to lose the scope of the analysis otherwise. We obtained some reasonably priced dual core Intel and AMD processors for our benchmarks today, and we will also throw in some benchmarks of newer single core chips to give some point of reference.

AMD's Opteron hits 3.2GHz

"AMD has no answer to the armada of new Intel's CPUs.""Penryn will be the final blow."These two sentences have been showing up on a lot of hardware forums around the Internet. The situation in the desktop is close to desperate for AMD as it can hardly keep pace with the third highest clocked Core 2 Duo CPU, and there are several quad core chips - either high clocked expensive ones or cheaper midrange models - that AMD simply has no answer for at present. As AMD gets closer to the launch of their own quad core, even at a humble 2GHz, Intel let the world know it will deliver a 3GHz quad core Xeon with 12 MB L2 that only needs 80W, and Intel showed that 3.33GHz is just around the corner too. However, there is a reason why Intel is more paranoid than the many hardware enthusiasts.While most people focus on the fact that Intel's Core CPUs win almost every benchmark in the desktop space, the battle in the server space is far from over. Look at the four socket market for example, also called the 4S space. As we showed in our previous article, the fastest Xeon MP at 3.4GHz is about as fast as the Opteron at 2.6GHz. Not bad at all, but today AMD introduces a 3.2GHz Opteron 8224, which extends AMD's lead in the 4S space. This lead probably won't last for long, as Intel is very close to introducing its newest quad core Xeon MP Tigerton line, but it shows that AMD is not throwing in the towel. Along with the top-end 3.2GHz 8224 (120W), a 3GHz 8222 at 95W, 3.2GHz Opteron 2224 (120W) and 3GHz 2222 (95W) are also being introduced.The 3.2GHz Opteron 2224 is quite interesting, as it is priced at $873. This is the same price point as the dual core Intel Xeon 5160 at 3GHz and the quad core Intel Xeon 5355. The contrast with the desktop market is sharp: not one AMD desktop CPU can be found in the higher price ranges. So how does AMD's newest offering compare to the two Intel CPUs? 
Is it just an attempt at deceiving IT departments into thinking the parts are comparable, or does AMD have an attractive alternative to the Intel CPUs?

HD Video Decode Quality and Performance Summer '07

While the R600 based Radeon HD 2900 XT only supports the features listed as "Avivo", G84 and G86 based hardware comprise the Avivo HD feature set (100% GPU offload) for all but VC-1 decoding (where decode support is the same as the HD 2900 XT, lacking only bitstream processing). With software and driver support finally coming up to speed, we will begin to be able to answer the questions that fill in the gaps with the quality and efficacy of AMD and NVIDIA's mainstream hardware. These new parts are sorely lacking in 3D performance, and we've been very disappointed with what they've had to offer. Neither camp has yet provided a midrange solution that bridges the gap between cost effective and acceptable gaming performance (especially under current DX10 applications). Many have claimed that HTPC and video enthusiasts will be able to find value in low end current generation hardware. We will certainly address this issue as well.

No 3G on the iPhone, but why? A Battery Life Analysis

Most of the initial reviews of Apple's iPhone shared one complaint in common: AT&T's EDGE network was slow, and it's the fastest cellular network the iPhone supported. In an interview with The Wall Street Journal, Steve Jobs explained Apple's rationale for not including 3G support in the initial iPhone:

"When we looked at 3G, the chipsets are not quite mature, in the sense that they're not low-enough power for what we were looking for. They were not integrated enough, so they took up too much physical space. We cared a lot about battery life and we cared a lot about physical size. Down the road, I'm sure some of those tradeoffs will become more favorable towards 3G but as of now we think we made a pretty good doggone decision."

The primary benefit of 3G support is obvious: faster data rates. Using dslreports.com's mobile speed test, we were able to pull an average of 100kbps off of AT&T's EDGE network as compared to 1Mbps on its 3G UMTS/WCDMA network.
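To put those two data rates in perspective, here is a small back-of-the-envelope sketch. The 500KB page size is a hypothetical figure chosen for illustration, not a measurement, and the calculation ignores latency and protocol overhead.

```python
def transfer_seconds(size_kilobytes, rate_kbps):
    """Seconds to move size_kilobytes (1 KB = 1000 bytes) at rate_kbps kilobits/s."""
    return size_kilobytes * 8 / rate_kbps


PAGE_KB = 500     # hypothetical page size, not a measured value
EDGE_KBPS = 100   # average EDGE rate quoted in the text
UMTS_KBPS = 1000  # average 3G UMTS rate quoted in the text (1Mbps)

print(f"EDGE: {transfer_seconds(PAGE_KB, EDGE_KBPS):.0f} s")
print(f"UMTS: {transfer_seconds(PAGE_KB, UMTS_KBPS):.0f} s")
```

With these assumed numbers the same page takes about 40 seconds over EDGE and about 4 seconds over UMTS, a tenfold gap that matches the ratio of the measured rates.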

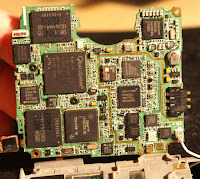

Apple's stance is that the iPhone gives you a slower than 3G solution with EDGE, that doesn't consume a lot of power, and a faster than 3G solution with Wi-Fi when you're in range of a network. Our tests showed that on Wi-Fi, the iPhone was able to pull between 1 and 2Mbps, which is faster than what we got over UMTS but not tremendously faster. While we appreciate the iPhone's Wi-Fi support, the lightning quick iPhone interface makes those times that you're on EDGE feel even slower than on other phones. Admittedly it doesn't take too long to get used to, but we wanted to dig a little deeper and see what really kept 3G out of the iPhone.

Pointing at size and power consumption, Steve gave us two targets to investigate. The space argument is an easy one to confirm: we cracked open the Samsung Blackjack and looked at its 3G UMTS implementation, powered by Qualcomm:

Motherboard Battle: iPhone (left) vs. Blackjack (right); only one layer of the iPhone's motherboard is present

Mr. Jobs indicated that integration was a limitation to bringing UMTS to the iPhone, so we attempted to identify all of the chips Apple used for its GSM/EDGE implementation (shown in purple) vs. what Samsung had to use for its Blackjack (shown in red):

The largest chip on both motherboards contains the multimedia engine which houses the modem itself, GSM/EDGE in the case of the iPhone's motherboard (left) and GSM/EDGE/UMTS in the case of the Blackjack's motherboard (right). The two smaller chips on the iPhone appear to be the GSM transmitter/receiver and the GSM signal amplifier. On the Blackjack, the chip in the lower left is a Qualcomm power management chip that works in conjunction with the larger multimedia engine we mentioned above. The two medium sized ICs in the middle appear to be the UMTS/EDGE transmitter/receivers, while the remaining chips are power amplifiers.

The iPhone would have to be a bit thicker, wider or longer to accommodate the same 3G UMTS interface that Samsung used in its Blackjack. Instead, Apple went with Wi-Fi alongside GSM - the square in green shows the Marvell 802.11b/g WLAN controller needed to enable Wi-Fi.

So the integration argument checks out, but what about the impact on battery life? In order to answer that question we looked at two smartphones - the Samsung Blackjack and Apple's iPhone. The Blackjack would be our 3G vs. EDGE testbed, while we'd look at the impact of Wi-Fi on power consumption using the iPhone.